Phased Implementation of the AI Act: Key Milestones [1/10]

This article is part of a thematic series of 10 analytical publications on the legal challenges raised by artificial intelligence and the responses provided by French and European legislators.

Brief Overview:

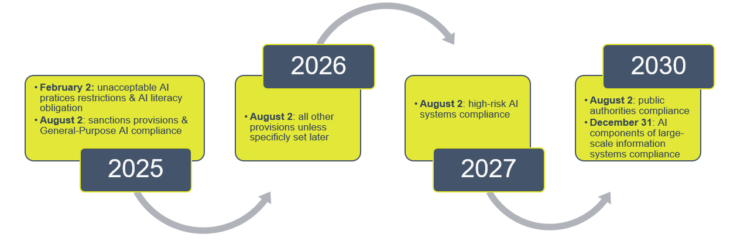

The AI Act’s implementation is phased over several years. Key milestones range from the entry into application of the provisions banning AI practices deemed unacceptable, in February 2025, to the last compliance deadlines for certain high-risk AI systems and large-scale IT systems in 2030. Operators should begin compliance efforts now by implementing internal training programs and risk assessments.

It is crucial for any company whose activities fall within the scope of Regulation (EU) 2024/1689 of June 13, 2024 on Artificial Intelligence (“AI Act”) – whether as a provider, importer, distributor, authorized representative, deployer, or product manufacturer – to stay informed about the timeline for its phased implementation.

On February 2, 2025, the European AI regulation reached its first key milestone with the entry into application of two essential provisions:

- The prohibition of AI practices deemed unacceptable due to the risks they pose to European values and fundamental rights. This prohibition notably covers harmful AI-based manipulation, deception, exploitation of vulnerabilities, and social scoring (Chapter II).

- The obligation for providers and deployers of AI systems to ensure a sufficient level of AI literacy among their staff and other persons dealing with the operation and use of AI systems on their behalf (Article 4).

Shortly before August 2025, the General-Purpose AI Code of Practice, several drafts of which have already been published under the coordination of the European Commission, is expected to take effect, giving providers of general-purpose AI models a framework for demonstrating compliance.

On August 2, 2025, additional key obligations will come into force:

- The rules on notifying authorities and notified bodies (Chapter III, Section 4) and the establishment of EU-level and national governance structures, including the designation of national competent authorities (Chapter VII).

- The obligations for providers of general-purpose AI models (Chapter V).

- The penalty provisions (Chapter XII), except the fines applicable to providers of general-purpose AI models (Article 101).

On August 2, 2026, all other provisions of the regulation will become applicable unless a later date is explicitly set (see below). These mainly include the core obligations for high-risk AI systems listed in Annex III (e.g., conformity assessments, quality management systems, post-market monitoring) and the transparency requirements for certain AI systems (such as those interacting with humans or generating deepfakes).

On August 2, 2027, the compliance obligations of the AI Act will extend to high-risk AI systems covered by Annex I, namely AI systems that are safety components of, or are themselves, products governed by the EU harmonization legislation listed in that Annex (such as medical devices). Additionally, providers of general-purpose AI models placed on the market before August 2, 2025, must have brought those models into compliance with the regulation (Article 111).

By August 2, 2030, providers and deployers of high-risk AI systems intended for use by public authorities and placed on the market or put into service before August 2, 2026, must have implemented compliance measures (Article 111).

The final step of the regulation’s implementation will occur on December 31, 2030, when AI systems that are components of the large-scale IT systems established by the legal acts listed in Annex X, and that were placed on the market or put into service before August 2, 2027, must be brought into compliance (Article 111).

AI system operators should begin their compliance efforts immediately so as not to be caught off guard as the applicable provisions come into force. The European Commission emphasizes that early, context-specific preparation is essential. AI literacy is only one part of a broader compliance strategy that should reflect the organization’s role, risk profile, and operational reality. Measures such as role-appropriate training, internal policies, and risk assessments should be carefully designed and extended to relevant contractors and service providers. Even before formal enforcement begins, gaps in AI literacy or preparedness could weigh negatively in broader investigations or regulatory action.

However, in light of complaints and criticism from the AI industry and from experts, the European Commission is currently discussing a pause in the implementation of the AI Act. These complaints stem from the absence of the accompanying texts (guidelines, harmonized technical standards) that were intended to clarify and harmonize the interpretation and application of the AI Act. Several of these texts have not yet been finalized or published.

In this regard, the development of technical standards has been delayed, and the General-Purpose AI Code of Practice, prepared by independent experts, was not published until July 10, 2025, more than two months after the scheduled date of May 2, 2025. It should be noted that the Code has not yet been approved by the European Commission, which has also not yet issued the related guidelines.

Given the uncertainty surrounding the AI Act, which also provides for significant penalties, many players in the AI industry are calling for the application of the Regulation’s remaining provisions to be postponed and for the text to be clarified and simplified. To date, such a postponement remains under consideration. The publication of the Code of Practice could, however, be read as a sign of the Commission’s reluctance to pause the application of the Regulation. In any event, it is essential to strike the right balance between respecting the timeline and clarifying the regulation.